Application Delivery Service Challenges in Microservices-Based Applications

Microservices-based application architectures have ushered in a new era of simplicity in deploying and managing complex application infrastructure, and in scaling it almost limitlessly with traffic demand.

But in doing so, they have also introduced new complexities and challenges that IT administrators, DevOps teams and application owners must address. As a result, application delivery services for these modern application architectures have to be provided in a fundamentally new way.

Evolving Application Architectures

Over the last two decades or so, three main trends have changed the way applications are put together and delivered to consumers.

First, the popularity of the web browser and high-speed internet as a delivery mechanism moved clients away from the desktop model, toward using applications through web browsers and eventually through apps on mobile devices.

This naturally led to a SaaS-based business and operational model with immense economies of scale. Rapid software change became a strategy for maintaining a competitive edge, which gave rise to the need for continuous integration and continuous deployment.

Second, virtualization, cloud and software-defined infrastructure brought the deployment and assembly of complex web-scale systems into the realm of software programming, and with it a series of tools and methodologies that make it simple to create such application infrastructure rapidly. This gave birth to the DevOps culture, a cross between operations and software development, which empowers departments and individual app owners to control the specific infrastructure services they need without waiting for an IT approval cycle.

The third and most consequential trend, made possible by automating many of the time-consuming chores of deploying and interconnecting server clusters, was a move away from large, “hard to debug and maintain” monolithic, mainframe-like application architectures toward larger numbers of more manageable, easier-to-debug distributed microservices.

Microservices are small, self-contained, independently deployable and scalable processes. They often use independent technology stacks and OSs, and they communicate with other services and clients over lightweight and asynchronous protocols, usually REST APIs with JSON payloads and pub-sub message queuing. Logic is separated from state. State is stored in multiple places, distributed and synchronized to be highly resilient. This has given rise to a service economy in which businesses expose data and processing services through APIs, so that other developers can compose them into new applications alongside their own (micro)services and other third-party services.

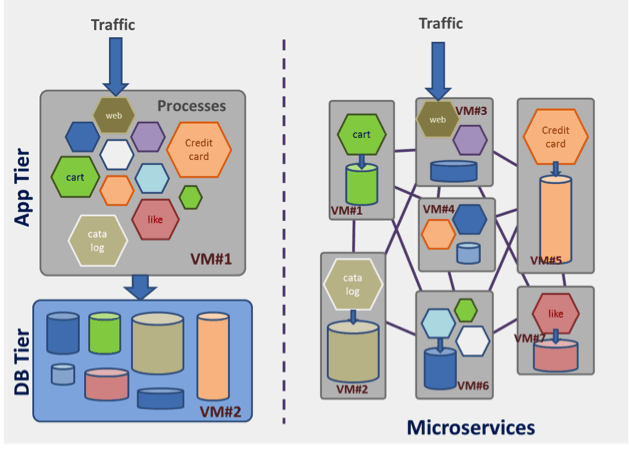

A visual comparison: monolithic vs. microservices application architectures

Side Effects of the Microservices Trend

On the flip side, an application built the traditional way runs on fewer VMs than the same application divided into microservices. After moving to microservices, a typical app with just three servers (one for PHP, one for Java and one for the database) could potentially become 30 services on perhaps a 12-node cluster. Dynamically scaling servers with traffic load, and replicating them for service availability, adds still more VMs. Docker containers, cluster managers and data center operating systems are addressing this challenge.

As a consequence, there is both an increase in API traffic over the web and a tremendous increase in east-west traffic compared to north-south traffic within the data center. Amazon.com typically uses between 100 and 150 APIs to render any of its pages. At Netflix, according to data from 2014, microservices make 5 billion API requests per day, of which 99.7 percent are internal. Similarly, 2013 data shows that salesforce.com’s mid-tier API traffic makes up over 60 percent of the traffic serviced by its application servers.
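To put the Netflix figures in perspective, the short calculation below splits 5 billion daily requests at the quoted 99.7 percent internal share and converts the totals into average per-second rates. It assumes traffic is spread evenly across the day, which real traffic never is, so peak rates will be considerably higher. The function name is illustrative only.

```python
SECONDS_PER_DAY = 86_400


def split_daily_requests(total_per_day: int, internal_permille: int) -> dict:
    """Split a daily request count into internal (east-west) and external
    (north-south) shares and derive average per-second rates.

    internal_permille is the internal share in thousandths (997 = 99.7%),
    which keeps the per-day split in exact integer arithmetic.
    """
    internal = total_per_day * internal_permille // 1000
    external = total_per_day - internal
    return {
        "internal_per_day": internal,
        "external_per_day": external,
        "avg_total_per_sec": total_per_day / SECONDS_PER_DAY,
        "avg_external_per_sec": external / SECONDS_PER_DAY,
    }


# Netflix, 2014: 5 billion API requests per day, 99.7 percent internal
netflix = split_daily_requests(5_000_000_000, 997)
for key, value in netflix.items():
    print(f"{key}: {value:,.0f}")
# internal_per_day: 4,985,000,000
# external_per_day: 15,000,000
# avg_total_per_sec: 57,870
# avg_external_per_sec: 174
```

Only about 15 million of those daily requests, roughly 174 per second on average, cross the edge. The remaining 4.985 billion stay inside the data center as east-west traffic.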

As one microservice invokes another, the interactions between them create dynamic, traffic-driven dependencies that can be much more complex than the explicit dependencies visible in networking parameters or references in code. Whenever issues arise, it becomes critical to understand the order in which services were invoked for that specific transaction. Documenting or visualizing this fluid application topology is not straightforward.
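Distributed tracing is one common way to recover that order. Each service records a span carrying its own ID, the ID of the span that called it and a start time, and the spans of one transaction are then stitched back into a call tree. The sketch below assumes such records already exist for a single transaction. The `Span` type, its fields and the service names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """Hypothetical trace record emitted by a service for one unit of work."""
    span_id: str
    parent_id: Optional[str]  # span of the caller; None for the entry point
    service: str
    start_ms: int


def invocation_order(spans: list[Span]) -> list[str]:
    """Rebuild the call tree of one transaction and return its
    'caller -> callee' edges depth-first, siblings in start-time order."""
    children: dict[Optional[str], list[Span]] = {}
    for span in spans:
        children.setdefault(span.parent_id, []).append(span)

    edges: list[str] = []

    def walk(parent: Span) -> None:
        for child in sorted(children.get(parent.span_id, []), key=lambda s: s.start_ms):
            edges.append(f"{parent.service} -> {child.service}")
            walk(child)

    for root in sorted(children.get(None, []), key=lambda s: s.start_ms):
        walk(root)
    return edges


# Spans arrive out of order from different services
spans = [
    Span("c", "a", "catalog", 5),
    Span("a", None, "web", 0),
    Span("d", "c", "pricing", 7),
    Span("b", "a", "auth", 1),
]
print(invocation_order(spans))
# ['web -> auth', 'web -> catalog', 'catalog -> pricing']
```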

The speed of software upgrades poses another challenge. As small microservices proliferate under different developer owners and continuous deployment methodologies, the application as a whole can change much faster than any single service, even when each service changes only bi-weekly. Amazon, GitHub, Facebook and others are well known to deploy production code multiple times a day. The challenge, therefore, is to automate the switching of production traffic from an older to a newer release. One also has to work with the lifecycle of these microservices, some of which are short-lived, created to handle transient loads or one-time functional needs. AWS Lambda, for example, can create ephemeral services lasting just 200 ms!
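A widely used way to automate this switch is a staged (canary) rollout. A growing share of traffic is shifted to the new release, and all of it is reverted if the new release's error rate rises. The class below is a minimal, single-process sketch of that technique, not a description of any particular product. The step percentages and the 1 percent error threshold are arbitrary assumptions. In practice, the error counts would come from the monitoring pipeline.

```python
import random
from typing import Optional


class CanaryRollout:
    """Staged traffic shift from an old release to a new one, with rollback.

    Each evaluate() call looks at the new release's error rate since the last
    step: if it is acceptable, the next traffic step is applied; if not, all
    traffic goes back to the old release.
    """

    def __init__(self, steps=(5, 25, 50, 100), max_error_rate: float = 0.01,
                 seed: Optional[int] = None) -> None:
        self.steps = list(steps)          # percent on the new release; must end at 100
        self.max_error_rate = max_error_rate
        self.step_index = -1              # no traffic shifted yet
        self.new_percent = 0
        self.rolled_back = False
        self._rng = random.Random(seed)

    def pick_release(self) -> str:
        """Route one request: 'new' with probability new_percent / 100."""
        return "new" if self._rng.randrange(100) < self.new_percent else "old"

    def evaluate(self, new_requests: int, new_errors: int) -> str:
        """Advance one step or roll back, based on the new release's errors."""
        if self.rolled_back or self.new_percent == 100:
            return "done"
        if new_requests > 0 and new_errors / new_requests > self.max_error_rate:
            self.new_percent = 0
            self.rolled_back = True
            return "rolled back"
        self.step_index += 1
        self.new_percent = self.steps[self.step_index]
        return f"shifted to {self.new_percent}%"


rollout = CanaryRollout(seed=7)
print(rollout.evaluate(0, 0))       # shifted to 5%
print(rollout.evaluate(1000, 3))    # 0.3% errors: shifted to 25%
print(rollout.evaluate(1000, 50))   # 5% errors: rolled back
print(rollout.pick_release())       # old
```

Ramping in small steps limits how many users a bad release can reach before the error-rate check catches it. The trade-off is that a full transition takes several evaluation intervals.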

The more distributed and interconnected an application is, the greater the need for close monitoring. Metrics, analysis, controller actions and automation are the lifeblood for maintaining such a system. An OS can easily generate over 100 metrics, and every app server instance can generate 50-plus metrics every second, so a typical deployment can easily generate thousands of metrics every second. Any infrastructure that collects, cleanses, collates and correlates these metrics, and turns them into actionable controls, needs the abstractions, identifiers and attributions required to make sense of the data.
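As a rough illustration, borrow the 12-node, 30-service example from earlier and assume one instance per service. Then 12 × 100 OS metrics plus 30 × 50 application metrics already comes to 2,700 metrics per second, before any replication. The sketch below computes that estimate. It also shows how labels on each sample, the identifiers and attributions mentioned above, let raw numbers be rolled up per service. The `Sample` type and its label names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """Hypothetical metric sample. The labels (service, instance) are what
    tie a raw number back to the part of the application it describes."""
    name: str
    value: float
    service: str
    instance: str


def metrics_per_second(nodes: int, os_metrics: int,
                       instances: int, app_metrics: int) -> int:
    """Rough volume estimate: OS metrics per node plus app metrics per instance."""
    return nodes * os_metrics + instances * app_metrics


def rollup(samples: list[Sample], metric: str) -> dict[str, float]:
    """Sum one metric across all instances of each service."""
    totals: dict[str, float] = defaultdict(float)
    for s in samples:
        if s.name == metric:
            totals[s.service] += s.value
    return dict(totals)


print(metrics_per_second(12, 100, 30, 50))  # 2700

samples = [
    Sample("requests", 120.0, "checkout", "checkout-1"),
    Sample("requests", 80.0, "checkout", "checkout-2"),
    Sample("requests", 45.0, "catalog", "catalog-1"),
    Sample("cpu_pct", 63.0, "catalog", "catalog-1"),
]
print(rollup(samples, "requests"))  # {'checkout': 200.0, 'catalog': 45.0}
```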

Consequences for Application Delivery Services

Traditionally, application delivery has been about managing the operational aspects of an application deployed inside a data center and providing reliable access for any client to any application from anywhere in the world. It typically includes services such as load balancing, traffic management and security, which on virtual and cloud infrastructures are available as pure software images. However, its view of applications and traffic flow patterns is traditional: centered on monolithic three-tier architectures that serve clients primarily outside the data center, and modeled on a traditional appliance form factor.

The microservices phenomenon has spread aggressively and is empowering application owners to take more control of their own application delivery services, typically using open source tools like NGINX and HAProxy rather than edge-based application delivery controllers (ADCs). Central IT teams have usually focused on edge devices and the traffic coming in or going out, and less on east-west mid-tier traffic, mostly because of existing architectures, cost and complexity. This trend toward open source software (OSS) disintermediates central IT, leading to the emergence of “shadow IT” groups with their own ad hoc policies.

Application delivery services in this context require a fundamental rethink. They need a highly distributed architecture that scales with the infrastructure and operates continuously under dynamic changes, which all stakeholders must be able to discover in real time. Traffic management must support upgrades with automatic traffic transition and rollback. Metrics must be collected from diverse instances and correlated along many dimensions, based on how the application’s microservices are defined. The following are some of the key challenges that application delivery services for microservices face:

- Handling dynamic dependencies among interconnected services

- Distributed traffic management

- Distributed security

- Operational intelligence

- Troubleshooting, application-level visibility and analytics

- Handling the effects of both hard and soft failures in dynamic topologies

The first challenge for any such app delivery service is inserting itself into a dynamic, distributed app topology. It must orchestrate insertion of the service as a cluster of proxies and stitch those proxies to infrastructure and networking artifacts such as security groups and scaling groups. Given the transient nature of physical resources, it must be driven by a declarative description of the application, discover the topology and networking parameters as actually deployed, and be able to modify them.
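One way to meet these requirements is the reconciliation pattern familiar from Kubernetes controllers. The controller compares the declared application with the topology actually discovered, emits the actions that close the gap, and repeats as the topology changes. The function below sketches a single pass under simplifying assumptions: one proxy pool per service, actions returned as strings rather than executed, and security and scaling groups left out. `AppSpec` and the proxy names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AppSpec:
    """Hypothetical declarative app description: service -> desired proxy count."""
    name: str
    proxies_per_service: dict[str, int] = field(default_factory=dict)


def reconcile(spec: AppSpec, discovered: dict[str, list[str]]) -> list[str]:
    """One reconciliation pass: compare the declared app with the proxies
    discovered in the running topology and list the actions that would
    make the two match. Actions are returned, not executed."""
    actions: list[str] = []
    for service, want in sorted(spec.proxies_per_service.items()):
        have = discovered.get(service, [])
        if len(have) < want:
            actions += [f"create proxy for {service}"] * (want - len(have))
        elif len(have) > want:
            # Retire the surplus proxies (here simply the last ones discovered)
            actions += [f"delete {p}" for p in have[want:]]
    # Proxies for services that are no longer declared are removed entirely
    for service in sorted(set(discovered) - set(spec.proxies_per_service)):
        actions += [f"delete {p}" for p in discovered[service]]
    return actions


spec = AppSpec("shop", {"cart": 2, "catalog": 1})
discovered = {
    "cart": ["cart-proxy-a"],
    "catalog": ["catalog-proxy-a", "catalog-proxy-b"],
    "legacy": ["legacy-proxy-a"],
}
print(reconcile(spec, discovered))
# ['create proxy for cart', 'delete catalog-proxy-b', 'delete legacy-proxy-a']
```

Because each pass only compares the desired and observed states, the same function copes with proxies that disappear for any reason. It works equally for a failed node, a scale-in event or a manual deletion.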

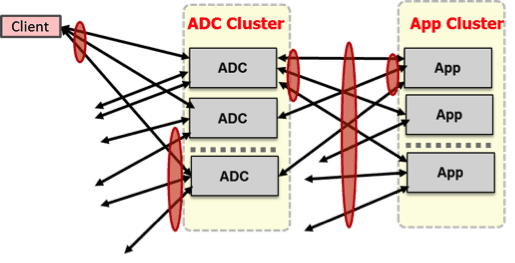

An important point that traditional software ADCs miss is the need to correlate traffic and load across a distributed cluster of service delivery proxies in order to control the system properly. The diagram above illustrates this point: an app server instance can receive traffic from many ADCs, while each ADC instance forwards traffic to many app servers. Each ADC must therefore take into account what the other ADCs are sending to a given app server.
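The difference shows up even with a simple least-connections policy. In the sketch below, one ADC's local counters favor one server, while the totals across all ADCs favor the other. It assumes the ADCs can share per-server connection counts with one another. How they share them, whether through a controller or peer-to-peer, is left out, and all names are hypothetical.

```python
from collections import Counter


def least_connections(servers: list[str], conns: Counter) -> str:
    """Pick the server with the fewest connections in `conns` (ties: list order)."""
    return min(servers, key=lambda s: conns[s])


def cluster_view(per_adc: dict[str, Counter]) -> Counter:
    """Sum the connections every ADC currently has open to each app server."""
    total: Counter = Counter()
    for conns in per_adc.values():
        total.update(conns)
    return total


servers = ["app-1", "app-2"]
per_adc = {
    "adc-a": Counter({"app-1": 2, "app-2": 5}),
    "adc-b": Counter({"app-1": 20, "app-2": 1}),
}

# adc-a looking only at its own traffic would pick app-1 ...
print(least_connections(servers, per_adc["adc-a"]))       # app-1
# ... but app-1 already carries 22 connections in total versus 6 for app-2
print(least_connections(servers, cluster_view(per_adc)))  # app-2
```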

Architecting for Clusters of ADCs and Application Microservices

The challenge then becomes maintaining an invariant view of an app and its traffic while the underlying resources are dynamic and distributed. To address this, we need methods that discover these relationships through a registry and notification-based strategies, followed by powerful visualization for the system operator. We also need a way to apply policies to the abstract view of the app. Containerized microservices create their own networking-related challenges that these systems must address.
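A registry with change notifications can be sketched in a few lines. The in-memory class below is a single-process stand-in for a registry that, in a real deployment, would be replicated and networked. The class, method and service names are hypothetical. A proxy subscribes to the services it fronts and keeps its backend pool current as instances come and go.

```python
from collections import defaultdict
from typing import Callable

# A listener receives (event, service, address); event is "added" or "removed"
Listener = Callable[[str, str, str], None]


class ServiceRegistry:
    """In-memory stand-in for a service registry: instances register and
    deregister, and watchers are notified of every change to a service."""

    def __init__(self) -> None:
        self._instances: dict[str, set[str]] = defaultdict(set)
        self._watchers: dict[str, list[Listener]] = defaultdict(list)

    def watch(self, service: str, listener: Listener) -> None:
        self._watchers[service].append(listener)

    def register(self, service: str, address: str) -> None:
        if address not in self._instances[service]:
            self._instances[service].add(address)
            self._notify("added", service, address)

    def deregister(self, service: str, address: str) -> None:
        if address in self._instances[service]:
            self._instances[service].remove(address)
            self._notify("removed", service, address)

    def instances(self, service: str) -> set[str]:
        return set(self._instances[service])

    def _notify(self, event: str, service: str, address: str) -> None:
        for listener in self._watchers[service]:
            listener(event, service, address)


# A proxy keeps its backend pool for "cart" in sync via notifications
pool: set[str] = set()


def update_pool(event: str, service: str, address: str) -> None:
    if event == "added":
        pool.add(address)
    else:
        pool.discard(address)


registry = ServiceRegistry()
registry.watch("cart", update_pool)
registry.register("cart", "10.0.0.5:8080")
registry.register("cart", "10.0.0.6:8080")
registry.deregister("cart", "10.0.0.5:8080")
print(sorted(pool))  # ['10.0.0.6:8080']
```

Watchers only see changes made after they subscribe. A proxy joining later would first read the current list with `instances()` and then rely on notifications.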

Distributed systems have many points of failure with intricate dependencies, and some will invariably fail. Because the interconnections and interactions between many different entities are asynchronous and constantly changing, it can be difficult to zero in on hard-to-find failures. Failures can be either “soft” (transient) or “hard” (final). Worse, a simple failure in one component can sometimes trigger a cascade of failures across the interdependent microservices of the app infrastructure. Recovery from hard failures must be dynamic and rapid, which requires both signaling of impending failures and timely recovery of failed instances.
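One widely used pattern for containing such cascades is the circuit breaker. A caller stops sending requests to a dependency that keeps failing, fails fast instead of tying up its own resources, and probes again after a cool-down. It is shown here as an illustration of the general technique, not as a method taken from the source. The thresholds are arbitrary assumptions, and the sketch is single-threaded.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Fail fast on a dependency that keeps failing, so its callers do not
    pile up waiting on it and spread the failure (single-threaded sketch).

    closed:    calls pass through; consecutive failures are counted.
    open:      calls are rejected immediately until reset_after seconds pass.
    half-open: a trial call is let through; success closes the circuit,
               failure opens it again.
    """

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], object]) -> object:
        if self.state == "open":
            raise RuntimeError("circuit open: failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip, or re-trip, the breaker
            raise
        self.failures = 0       # any success resets the breaker
        self.opened_at = None
        return result


# Usage with a fake clock so the example runs instantly
now = [0.0]
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: now[0])


def flaky():
    raise ConnectionError("inventory service unreachable")  # hypothetical dependency


for _ in range(2):
    try:
        breaker.call(flaky)
    except ConnectionError:
        pass
print(breaker.state)               # open
now[0] = 10.0
print(breaker.state)               # half-open
print(breaker.call(lambda: "ok"))  # ok
print(breaker.state)               # closed
```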

The promise of microservices is profound and can lead to significant application agility and improved time to market. Traditional architectures need to evolve rapidly, and application delivery mechanisms need to keep in step. In short, application services for the microservices age merit fresh thinking and must be re-architected for changing needs.
