What Is Kubernetes (K8s) for Containerized Applications?

Leveraging Kubernetes and Load Balancing Kubernetes Application Traffic to Unlock the Full Value of Cloud-native Apps

Kubernetes is a core technology for containerization, in which applications are packaged and isolated to allow greater scalability and portability. Also known as K8s, Kubernetes simplifies and automates the deployment, scaling, and management of containerized applications, which can consist of a large number of distributed containers, so that they can be orchestrated more easily across multi-cloud and hybrid-cloud environments.

Originally created as an internal project by Google, Kubernetes is now an open source project hosted by the Cloud Native Computing Foundation (CNCF).

As containerized applications become a mainstay of DevOps, continuous integration/continuous delivery (CI/CD), and cloud-native development, Kubernetes has gained widespread adoption by enabling efficient scalability, effortless workload portability, and simpler ways to manage an increasingly complex ecosystem of distributed, dynamic, and ephemeral resources.

What Are Containerized Applications, and Why Are They So Popular?

In simple terms, application containerization is a highly efficient and portable form of application virtualization. Rather than launching an entire virtual machine for each application, complete with operating system (OS), organizations can run several containers on a single host, sharing the same OS kernel.

Working on bare-metal systems, cloud instances, and VMs across a variety of operating systems, containers make more efficient use of memory, CPU, and storage than traditional virtualized or physical applications. Combined with their smaller size, this makes it possible to support many more application containers on the same infrastructure. Containers can be reproduced easily, with their file systems, binaries, and other information remaining the same across the DevOps lifecycle, and a given container can run on any system, in any cloud or on-prem, without the need to modify code or manage OS-specific library dependencies. These characteristics make application containerization ideally suited for the speed, flexibility, and portability demanded by digital business.

What Is Kubernetes Actually Used For?

To realize the benefits of application containerization, organizations need to be able to first containerize applications, and then orchestrate them effectively across their infrastructure. For the former, they rely on an application containerization technology such as Docker, containerd, CRI-O, or runC. For the latter, they most often turn to Kubernetes.

As a container orchestration system, Kubernetes groups the containers that make up an application into logical units so that they can be discovered and managed more easily at a massive scale. Integrated with the container runtime via the Container Runtime Interface (CRI), K8s automates day-to-day management of containerized applications, abstracts related resources, and runs continuous health checks to ensure service availability. In this way, organizations can build and manage cloud-native microservices-based applications more quickly, deploy and move applications across multiple environments more easily, and scale applications more efficiently.
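As a rough illustration of what this looks like in practice, the sketch below builds a Kubernetes Deployment manifest as a Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The application name `web`, the image `example.com/web:1.0`, the port, and the `/healthz` probe path are all hypothetical placeholders, not values from any real system.

```python
import json


def make_deployment(name, image, replicas, port):
    """Build an apps/v1 Deployment manifest as a plain dictionary.

    All argument values are supplied by the caller; nothing here talks to a cluster.
    """
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Health check: Kubernetes restarts the container if this probe keeps failing.
        # The /healthz path is an assumption about the application, not a K8s default.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": port},
            "periodSeconds": 10,
        },
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            # Desired number of identical pods; Kubernetes works to maintain this count.
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    manifest = make_deployment("web", "example.com/web:1.0", replicas=3, port=8080)
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and passing it to `kubectl apply -f` would ask the cluster to keep three replicas of the container running and to restart any replica whose health check fails.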

For DevOps teams, K8s provides a common platform for deploying applications across different cloud environments, abstracting the intricacies of underlying cloud infrastructure so they can focus fully on higher-value coding. Organizations gain the flexibility to deploy applications in the cloud that best meets the needs of customers while optimizing costs.

Load Balancing Kubernetes Application Traffic for Best Results

Just as traditional applications rely on application load balancers to ensure reliability, availability, and performance across a multi-server environment, a cloud environment calls for a cloud load balancer to distribute workloads across an organization’s cloud resources. Load balancing Kubernetes application traffic is important to deliver an optimal experience for users. The basic premise is similar: application load balancers ensure that no single server bears too much demand, a cloud load balancer balances network traffic across clouds, and load balancing traffic to Kubernetes applications makes it possible to distribute demand evenly across the Kubernetes pods that make up a service. There are a few options for this:

- NodePort: With this simple approach for small clusters with only basic routing rules, a port is allocated on each node (known as NodePort) to allow users to access the application at the node’s IP address and port value.

- LoadBalancer: Supporting multiple protocols and ports per service, LoadBalancer can be thought of as a more advanced version of NodePort. Here, a port is allocated on each node and connected to an external load balancer. This option requires integration with the underlying cloud provider infrastructure and is typically used with public cloud providers that have such an integration, a factor that can complicate cross-provider portability. In addition, the default assignment of an external IP address to each service, each with its own external load balancer in the cloud, can quickly bloat costs.

- Ingress Controller: K8s defines an Ingress resource, implemented by an Ingress Controller, that can be used to route HTTP and HTTPS traffic to applications running inside the cluster. While an Ingress Controller can both expose pods to external traffic and distribute network traffic to services according to load balancing algorithms, it does not entirely eliminate the need for an external load balancer. Because each public cloud provider has its own Ingress Controller that works in conjunction with its own load balancer, a multi-provider cloud strategy will call for an external load balancer to bridge these environments and deliver a cloud-agnostic K8s environment.
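To make the three options above more concrete, here is a minimal sketch that builds the corresponding manifests as Python dictionaries: a Service of type `NodePort` or `LoadBalancer`, and an Ingress rule for HTTP routing. The names `web` and `web-ingress`, the host `web.example.com`, and the port numbers are hypothetical; the field names follow the Kubernetes `v1` Service and `networking.k8s.io/v1` Ingress schemas.

```python
import json


def make_service(name, service_type, port, target_port):
    """Build a v1 Service manifest that exposes pods labeled app=<name>."""
    # Only the two externally reachable types discussed in the text are accepted here.
    if service_type not in ("NodePort", "LoadBalancer"):
        raise ValueError("service_type must be 'NodePort' or 'LoadBalancer'")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},
            # port: the Service port; targetPort: the container port behind it.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def make_ingress(name, host, service_name, service_port):
    """Build a networking.k8s.io/v1 Ingress that routes all paths on one host to one Service."""
    backend = {"service": {"name": service_name, "port": {"number": service_port}}}
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {"path": "/", "pathType": "Prefix", "backend": backend}
                        ]
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    for manifest in (
        make_service("web", "NodePort", port=80, target_port=8080),
        make_service("web", "LoadBalancer", port=80, target_port=8080),
        make_ingress("web-ingress", "web.example.com", "web", 80),
    ):
        print(json.dumps(manifest, indent=2))
```

Note that the only difference between the first two manifests is the `type` field; what happens behind a `LoadBalancer` Service depends on the cloud provider integration, which is why portability across providers can be harder.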

As organizations consider their options for load balancing Kubernetes application traffic, they should seek a solution that is cloud-agnostic across both public and private clouds, supports dynamic traffic management configuration as pods are created and scaled, and integrates easily with existing DevOps tools. As with traditional application load balancers, the solution should also provide centralized visibility and analytics for proactive troubleshooting and fast root cause analysis.
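The idea of distributing demand evenly while the set of pods changes can be sketched with a simple round-robin balancer. This is a toy illustration only, not how any particular product or Ingress Controller is implemented; the class name `RoundRobinBalancer` and the pod addresses are hypothetical.

```python
from collections import Counter


class RoundRobinBalancer:
    """Toy round-robin balancer whose backend list can change at runtime."""

    def __init__(self):
        self._backends = []  # pod addresses as "ip:port" strings
        self._counter = 0    # total number of picks made so far

    def add(self, address):
        """Register a pod address, for example when a pod starts."""
        if address not in self._backends:
            self._backends.append(address)

    def remove(self, address):
        """Deregister a pod address, for example when a pod stops."""
        if address in self._backends:
            self._backends.remove(address)

    def pick(self):
        """Return the next backend in rotation."""
        if not self._backends:
            raise RuntimeError("no backends available")
        address = self._backends[self._counter % len(self._backends)]
        self._counter += 1
        return address


if __name__ == "__main__":
    lb = RoundRobinBalancer()
    lb.add("10.0.0.1:8080")
    lb.add("10.0.0.2:8080")
    print(Counter(lb.pick() for _ in range(4)))

    # A third pod is scaled up; later requests rotate across all three.
    lb.add("10.0.0.3:8080")
    print(Counter(lb.pick() for _ in range(6)))
```

In a real deployment, the calls to `add` and `remove` would be driven by changes observed in the cluster rather than made by hand, which is the "dynamic traffic management configuration" described above.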

How A10 Networks Supports Kubernetes

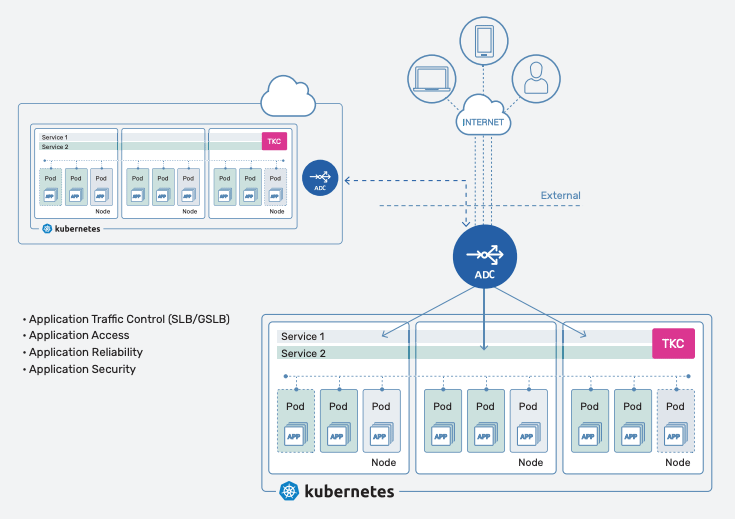

A10 Networks supports Kubernetes through a range of solutions. Thunder® Application Delivery Controller (ADC), available in a broad array of form factors (physical, virtual, bare metal, container and cloud instances), optimizes the delivery and security of container-based cloud-native applications and services running over public clouds or private clouds.

With Thunder Kubernetes Connector (TKC), A10 Networks provides an easy and automated way to discover the services running within a Kubernetes cluster and configure the Thunder ADC to load-balance traffic for Kubernetes Pods as they start, stop, or change nodes. The TKC runs as a container inside the Kubernetes cluster, works with any form factor of Thunder ADC, and fits seamlessly into the CI/CD release cycle.

External access to Kubernetes applications in multi/hybrid cloud with A10 TKC and Thunder ADC

The following video demonstrates how Thunder ADC, together with Thunder Kubernetes Connector (TKC), provides access to applications running in a Kubernetes (K8s) cluster.

Related Resources

- Advanced Application Access for Kubernetes (Webinar)

- Requirements of Optimized Traffic Flow and Security in Kubernetes (Webinar)

- 4 Key Considerations for Advanced Load Balancing and Traffic Insights for Kubernetes (Webinar)

- The Top 7 Requirements for Optimized Traffic Flow and Security in Kubernetes (Blog Post)

- How to Deploy an Ingress Controller in Azure Kubernetes (Blog Post)

- Digital Resiliency Needs Modern Application Delivery (Blog Post)

- DevOps/SecOps Tools for Multi-cloud Application Service Automation (Blog Post)

- Hybrid Cloud and Multi-Cloud Polynimbus Policy Enforcement (Blog Post)

- How do you Load Balance in a Hybrid or Multi-cloud World? (Blog Post)

- What is a Multi-cloud Environment? (Glossary Post)

- What is a Hybrid Cloud? (Glossary Post)

- What are Containers and why do we need them? (Video)