What is Server Load Balancing (SLB)?

Server load balancing (SLB) is a data center architecture that distributes network traffic evenly across a group of servers. Distributing workloads this way provides application availability, scale-out of server resources, and health management of server and application systems.

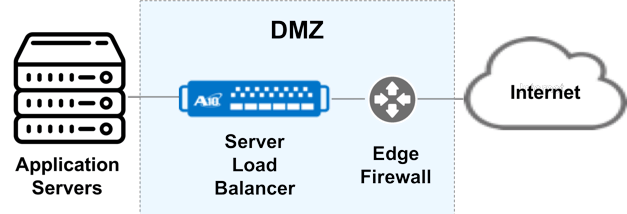

Server Load Balancer systems are often placed between the Internet edge routers or firewalls and the Internet-facing application servers, inside the DMZ security zone.

Server Load Balancer Typical Configuration

In this configuration, the SLB systems act as a reverse proxy, presenting the hosted services to remote network clients. Remote clients on the Internet connect to the SLB system, which masquerades as a single application server and then forwards each connection to the optimal application server.
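The reverse-proxy behavior can be sketched in Python with `asyncio`. This is a minimal Layer 4 (plain TCP) sketch under stated assumptions, not how an A10 device or any other product implements it: the helper names `pipe`, `start_proxy` and `demo`, the back-end addresses, and the use of simple round-robin rotation as a stand-in for choosing "the optimal application server" are all hypothetical. Clients connect only to the proxy's address; the proxy opens its own connection to a back-end server and relays bytes in both directions.

```python
import asyncio
import itertools


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def start_proxy(backends, host="127.0.0.1", port=0):
    """Listen on one address (the service clients see) and relay each client
    connection to a back-end server chosen in round-robin order (hypothetical)."""
    rotation = itertools.cycle(backends)

    async def handle_client(client_reader, client_writer):
        backend_host, backend_port = next(rotation)
        # The proxy opens its own, separate connection to the chosen back end.
        server_reader, server_writer = await asyncio.open_connection(backend_host, backend_port)
        # Relay both directions concurrently; the client never learns the back-end address.
        await asyncio.gather(
            pipe(client_reader, server_writer),
            pipe(server_reader, client_writer),
        )

    return await asyncio.start_server(handle_client, host, port)


async def demo():
    """Start two toy back ends and send three requests through the proxy."""

    async def start_backend(tag: bytes):
        async def reply(reader, writer):
            data = await reader.read(65536)
            writer.write(tag + data)  # tag the reply so we can see which back end answered
            await writer.drain()
            writer.close()

        server = await asyncio.start_server(reply, "127.0.0.1", 0)
        return server, server.sockets[0].getsockname()[:2]

    backend_a, addr_a = await start_backend(b"A:")
    backend_b, addr_b = await start_backend(b"B:")
    proxy = await start_proxy([addr_a, addr_b])
    proxy_port = proxy.sockets[0].getsockname()[1]

    replies = []
    for _ in range(3):
        reader, writer = await asyncio.open_connection("127.0.0.1", proxy_port)
        writer.write(b"hi")
        await writer.drain()
        replies.append(await reader.read())  # read until the proxy closes the connection
        writer.close()

    for server in (proxy, backend_a, backend_b):
        server.close()
    return replies


if __name__ == "__main__":
    print(asyncio.run(demo()))  # [b'A:hi', b'B:hi', b'A:hi']
```

Because the proxy terminates the client's connection and opens a new one, it is free to pick any back end per connection; a production load balancer would replace the rotation with one of the selection algorithms it supports.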

Application Delivery Controllers

Server Load Balancing (SLB) products have evolved to provide additional services and features, and are now called Application Delivery Controllers (ADCs). ADCs combine traditional Server Load Balancing features with Application Acceleration, Security Firewalls, SSL Offload, Traffic Steering and other technologies in a single platform.

Server Load Balancing Benefits

Scalability

Servers host application-level services, such as business applications, and network services, such as firewalls and DNS. The load on application servers keeps increasing, in network throughput as well as in CPU, memory and other server resources. Each server can support only a limited workload, so increasing capacity requires adding more servers. In a load-balanced configuration, additional servers can be added live to increase capacity without affecting the existing systems.
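Live scale-out can be illustrated with a small in-memory pool. This is a minimal sketch assuming a plain round-robin pool; `ServerPool` and the server names are hypothetical, and real load balancers manage pool membership through their own configuration interfaces. The point is that a new member joins the rotation on the next selection, with no restart and no change to the existing members.

```python
import threading


class ServerPool:
    """Hypothetical round-robin pool whose membership can grow while in use."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._next = 0
        self._lock = threading.Lock()  # membership may change while requests are routed

    def add(self, server):
        """Scale out: the new server takes part from the next selection onward."""
        with self._lock:
            self._servers.append(server)

    def pick(self):
        """Return the next server in rotation."""
        with self._lock:
            server = self._servers[self._next % len(self._servers)]
            self._next += 1
            return server


pool = ServerPool(["app1", "app2"])
before = [pool.pick() for _ in range(4)]  # only the original two servers
pool.add("app3")                          # added live; existing members are untouched
after = [pool.pick() for _ in range(3)]   # one full rotation now covers all three
print(before, after)
```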

Reliability

Load balancing is commonly used to build highly available application infrastructures. When multiple servers are load balanced, the failure of any single server does not cause a serious outage: user sessions that were served by the failed server are routed to the remaining servers and re-established.
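Failover depends on detecting the failed server and skipping it. The sketch below assumes the simplest possible health check, a TCP connection attempt; the function names and the local test addresses are hypothetical. It only shows new connections being steered away from a failed server; re-establishing the affected user sessions is left to the clients and applications.

```python
import socket


def tcp_health_check(address, timeout=1.0):
    """Hypothetical TCP health check: healthy if a connection to (host, port) succeeds."""
    try:
        with socket.create_connection(address, timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def pick_healthy(servers, is_healthy, start=0):
    """Return the first server, in rotation order from `start`, that passes the check."""
    for offset in range(len(servers)):
        candidate = servers[(start + offset) % len(servers)]
        if is_healthy(candidate):
            return candidate
    return None  # every server failed: nothing to route to


# Usage: one local "server" that is listening and one address with nothing behind it.
listener = socket.socket()
listener.bind(("127.0.0.1", 0))
listener.listen()
up = listener.getsockname()

probe = socket.socket()
probe.bind(("127.0.0.1", 0))
down = probe.getsockname()
probe.close()  # the port is no longer bound, so connections to it fail

chosen = pick_healthy([down, up], tcp_health_check)  # skips the failed server
print(chosen == up)
```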

Manageability

Server maintenance is difficult in environments without load balancing, because changing configurations on live systems can easily cause unforeseen issues and outages. Systems behind a server load balancer can be removed from service without disrupting users, then upgraded, replaced or updated with new configurations. Operations staff can test these systems before returning them to service.
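Removing a server from service without disrupting users is usually done by draining it: new connections stop going to it while existing ones finish. A minimal sketch, assuming connection counts are tracked in memory; `Pool`, `Backend` and the method names are hypothetical, not a product API.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    in_service: bool = True
    active_connections: int = 0


class Pool:
    """Hypothetical pool that supports taking a member out of service for maintenance."""

    def __init__(self, names):
        self.backends = {name: Backend(name) for name in names}
        self._counter = 0

    def open_connection(self):
        """Assign a new connection to an in-service member, rotating between them."""
        in_service = [b for b in self.backends.values() if b.in_service]
        if not in_service:
            raise RuntimeError("no back-end server in service")
        chosen = in_service[self._counter % len(in_service)]
        self._counter += 1
        chosen.active_connections += 1
        return chosen.name

    def close_connection(self, name):
        self.backends[name].active_connections -= 1

    def drain(self, name):
        """Stop sending new connections; existing ones are allowed to finish."""
        self.backends[name].in_service = False

    def ready_for_maintenance(self, name):
        """True once a drained member has no connections left."""
        backend = self.backends[name]
        return not backend.in_service and backend.active_connections == 0

    def restore(self, name):
        """Return a member to rotation after it has been updated and tested."""
        self.backends[name].in_service = True


pool = Pool(["app1", "app2"])
first = pool.open_connection()                # "app1"
second = pool.open_connection()               # "app2"
pool.drain("app1")
waiting = pool.ready_for_maintenance("app1")  # False: one connection is still open
third = pool.open_connection()                # "app2", the only member in service
pool.close_connection(first)
ready = pool.ready_for_maintenance("app1")    # True: app1 can now be taken offline
```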

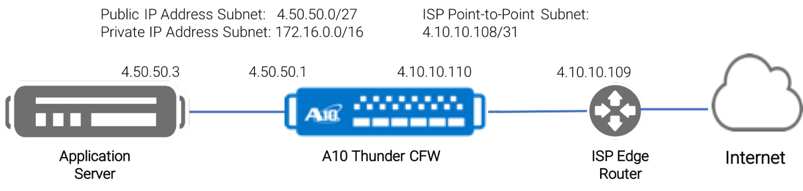

Figure: Application Service, A10 Thunder CFW

Load Balancing Algorithms

There are a variety of methods that dictate how back-end servers are selected by the load balancing device. Some of the algorithms or criteria for selecting servers include:

- Round robin – Each new connection is passed to the next server in turn

- Least connections – The server with the fewest current network connections is selected

- Fastest response – Each server application response is monitored, and the back-end server responding the fastest is selected

- Server health – Server health is monitored with various techniques and the healthiest server is selected

- Server loading – Server loading is monitored with various techniques and the least loaded server is selected

- Traffic Steering – Traffic Steering and Network Control policies are used to calculate/select the back-end server

- Custom Scripting – Advanced SLB products can parse packets and forward them based on customer-provided logic or scripts.
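Several of these criteria reduce to a simple selection over per-server metrics. The sketch below assumes those metrics (health status, open connection count, measured response time) have already been collected; the `Server` fields, function names and numbers are hypothetical. Server loading would follow the same pattern as least connections with a load metric in place of the connection count; traffic steering and custom scripting are product-specific and not shown.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    healthy: bool = True          # result of the most recent health check
    active_connections: int = 0   # connections currently open to this server
    avg_response_ms: float = 0.0  # recently measured application response time


def healthy_only(servers):
    """Server health: drop servers that failed their last check."""
    return [s for s in servers if s.healthy]


def round_robin(servers):
    """Round robin: an endless iterator handing out servers in turn."""
    return itertools.cycle(servers)


def least_connections(servers):
    """Least connections: the server with the fewest open connections."""
    return min(servers, key=lambda s: s.active_connections)


def fastest_response(servers):
    """Fastest response: the server with the lowest measured response time."""
    return min(servers, key=lambda s: s.avg_response_ms)


servers = [
    Server("app1", active_connections=12, avg_response_ms=40.0),
    Server("app2", active_connections=3, avg_response_ms=95.0),
    Server("app3", healthy=False, avg_response_ms=10.0),  # failed its health check
]
pool = healthy_only(servers)
rotation = round_robin(pool)
print([next(rotation).name for _ in range(3)])  # ['app1', 'app2', 'app1']
print(least_connections(pool).name)             # app2
print(fastest_response(pool).name)              # app1
```

Filtering on health first matters: app3 would otherwise win both the least-connections and fastest-response comparisons despite being down.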

How A10 Networks Can Help

The A10 Networks Thunder family of Application Delivery Controllers provides a broad and advanced set of features and is deployed in most of the world’s largest carrier and service provider networks.

Server Load Balancer features include:

- High-performance, template-based, Layer 7 URL and URL hash switching

- Header, URL and domain manipulation

- Comprehensive Layer 7 application persistence support

- aFleX technology for deep packet inspection and traffic manipulation

- Weighted Round Robin

- Weighted Least Connections

- Fastest Response

- Comprehensive protocol support – ICMP, TCP, UDP, HTTP, HTTPS, FTP, RTSP, SMTP, POP3, SNMP, DNS, RADIUS, LDAP, SIP

- Tcl-scriptable health check support
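Weighted Round Robin and Weighted Least Connections give more capable servers a proportionally larger share of traffic. The sketch below shows one common interpretation of each, not A10's implementation: the weighted round robin uses the "smooth" scheme found in nginx's upstream module, and weighted least connections compares active connections divided by weight. Class names, server names and weights are hypothetical.

```python
class SmoothWeightedRoundRobin:
    """Weighted round robin ("smooth" variant): each server is picked in
    proportion to its weight, with picks spread out rather than bunched."""

    def __init__(self, weights):
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {name: 0 for name in self.weights}

    def pick(self):
        # Every server earns its weight; the leader is picked and pays back the total.
        for name, weight in self.weights.items():
            self.current[name] += weight
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


def weighted_least_connections(active, weights):
    """Pick the server with the fewest active connections per unit of weight."""
    return min(active, key=lambda name: active[name] / weights[name])


wrr = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print([wrr.pick() for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']

# "big" has 4x the weight of "small": 30 / 4 = 7.5 beats 10 / 1 = 10.
print(weighted_least_connections({"big": 30, "small": 10}, {"big": 4, "small": 1}))  # big
```

After every full cycle of picks (here 7, the sum of the weights) the counters return to zero, so each server receives exactly its weighted share per cycle.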

Additional Resources

- Learn how to scale, manage, and optimize your applications with an SLB. Read our solution brief “Get More from Your Enterprise Network”.